S 3.90 General requirements for centralised logging

Initiation responsibility: IT Security Officer, Head of IT

Implementation responsibility: Administrator

Most IT systems within an information system can be configured to generate logged data about various events, such as file accesses. This data contains important information that helps to identify and locate hardware and software problems as well as resource bottlenecks. Logged data is also used to detect security problems and attacks. To obtain a complete overview of the information system, a centralised logging server can be used which merges, analyses, and monitors the different logged data.

Structure of log files

As a matter of principle, every log entry contains the date and time as central information along with the captured event. Depending on the system generating the log, these entries may be structured differently. Time and date are of particular importance for centralised logging, because events from different systems can only be correlated reliably if the clocks of those systems are synchronised (see S 4.227 Use of a local NTP server for time synchronisation).
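Because different systems report time differently, a central server usually converts every timestamp to one reference, typically UTC, before correlating events. The following is a minimal sketch of that step, assuming two input formats: the classic BSD-syslog timestamp (`Oct 11 22:14:15`), which carries neither a year nor a time zone, and an ISO 8601 timestamp with an explicit offset. The function names and the idea of supplying year and UTC offset from outside are illustrative assumptions, not a prescribed method.

```python
from datetime import datetime, timedelta, timezone

def rfc3164_to_utc(stamp: str, year: int, utc_offset_hours: float) -> datetime:
    # BSD-syslog timestamps ("Oct 11 22:14:15") contain neither a year nor a
    # time zone, so both must be supplied externally (assumption: the sender's
    # clock is NTP-synchronised and its UTC offset is known).
    local = datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")
    tz = timezone(timedelta(hours=utc_offset_hours))
    return local.replace(tzinfo=tz).astimezone(timezone.utc)

def iso8601_to_utc(stamp: str) -> datetime:
    # ISO 8601 timestamps with an explicit offset can be converted directly.
    return datetime.fromisoformat(stamp).astimezone(timezone.utc)

# The same instant, reported by two differently configured systems:
a = rfc3164_to_utc("Oct 11 22:14:15", 2024, 2)
b = iso8601_to_utc("2024-10-11T20:14:15+00:00")
print(a == b, a.isoformat())  # -> True 2024-10-11T20:14:15+00:00
```

Without the external year and offset, entries from a system that logs in local time would appear shifted against all others, which is exactly the correlation problem that time synchronisation is meant to avoid.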

Centralisation

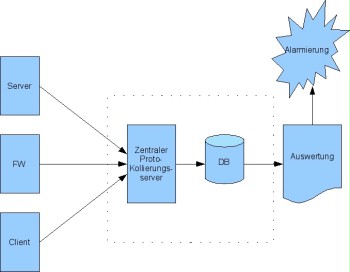

To improve clarity and to make the collected data easier to process further, the logged data of all components involved is often transmitted to a centralised server via a secure channel. If a centralised logging server is used, it must have sufficient storage capacity to save the log messages of the information system.

Figure: Basic structure of centralised logging
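Whether the storage capacity is sufficient can be estimated roughly from the message rate, the average message size, and the retention period. The sketch below shows this arithmetic with purely hypothetical figures (500 messages per second, 300 bytes per message, 90 days); it ignores compression, indexing overhead, and peak loads, all of which can change the result considerably in practice.

```python
def estimate_storage_bytes(msgs_per_second: float, avg_msg_bytes: float,
                           retention_days: int) -> float:
    # Raw volume only (assumption: constant average message rate; peaks
    # during incidents, compression and index overhead are not modelled).
    seconds_per_day = 86_400
    return msgs_per_second * avg_msg_bytes * seconds_per_day * retention_days

# Hypothetical figures: 500 messages/s, 300 bytes each, kept for 90 days.
total = estimate_storage_bytes(500, 300, 90)
print(f"{total / 1e9:.1f} GB")  # -> 1166.4 GB
```

At these figures the server receives about 12.96 GB per day, so a generous safety margin on top of the estimate is advisable.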

Syslog, for example, may be used to transmit status, error, alarm, and other messages from servers and network components to the logging server. The term syslog denotes both the protocol and the program used to generate, receive, forward, or save event messages. Syslog messages are transmitted in clear text; they are only encrypted in transit if they are tunnelled, for example via SSL/TLS or SSH.
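To illustrate what actually crosses the network, the sketch below builds a message in the classic BSD-syslog layout described in RFC 3164, where the priority value is computed as facility × 8 + severity, and sends it as a plain UDP datagram. The function names are hypothetical, and only a small excerpt of the facility and severity codes is shown. The UDP variant makes the clear-text problem obvious: anyone on the path can read the message.

```python
import socket
from datetime import datetime

# Excerpt of facility and severity codes from RFC 3164.
FACILITY_AUTH = 4        # security/authorization messages
SEVERITY_CRITICAL = 2    # critical conditions
MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]

def format_bsd_syslog(facility: int, severity: int, ts: datetime,
                      hostname: str, tag: str, msg: str) -> str:
    # PRI combines facility and severity: facility * 8 + severity.
    pri = facility * 8 + severity
    # The day of month is space-padded to two characters ("Oct  1").
    stamp = f"{MONTHS[ts.month - 1]} {ts.day:2d} {ts:%H:%M:%S}"
    return f"<{pri}>{stamp} {hostname} {tag}: {msg}"

def send_udp(message: str, host: str, port: int = 514) -> None:
    # Plain UDP: the message crosses the network unencrypted.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message.encode("utf-8"), (host, port))

line = format_bsd_syslog(FACILITY_AUTH, SEVERITY_CRITICAL,
                         datetime(2024, 10, 11, 22, 14, 15), "mymachine",
                         "su", "'su root' failed for lonvick on /dev/pts/8")
print(line)  # -> <34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8
# send_udp(line, "logserver.example.org")  # hypothetical logging server
```

In real deployments the message is normally produced by the syslog daemon or a library (Python's standard library, for instance, offers `logging.handlers.SysLogHandler`) rather than formatted by hand.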

It is not necessary to collect and analyse every possible log message. Different log files often contain identical information and therefore indicate the same event. Redundant data is therefore combined into a single record to reduce the amount of logged information (aggregation). The challenge here is that the logged data is available in different formats and must first be normalised.

Normalisation

The messages collected from different sources must be converted to a uniform format before they can be analysed (normalisation), since there is no uniform standard for log formats and transmission protocols. Normalisation allows different log formats such as syslog, Microsoft Eventlog, SNMP, Netflow, or IPFIX to be mapped onto one another and then analysed together. Normalisation can be performed using a simple script or complex applications.
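A "simple script" for normalisation could look roughly like the following sketch. It maps a BSD-syslog line and a Windows-style event, represented here as a dictionary with hypothetical keys loosely modelled on Windows event fields, onto one common record. The schema (`LogRecord`), the level-to-severity mapping, and the assumption that the syslog sender logs in UTC are all illustrative choices, not a standard.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class LogRecord:
    # Hypothetical common schema; a real deployment defines its own fields.
    timestamp: str   # ISO 8601, UTC
    host: str
    source: str      # original format, e.g. "syslog" or "eventlog"
    severity: int    # syslog scale: 0 (emergency) .. 7 (debug)
    message: str

SYSLOG_RE = re.compile(
    r"<(?P<pri>\d{1,3})>(?P<ts>[A-Z][a-z]{2} [ \d]\d \d\d:\d\d:\d\d) "
    r"(?P<host>\S+) (?P<msg>.*)")

# Assumed mapping of Windows event levels (1 critical .. 5 verbose)
# onto syslog severities.
EVENTLOG_LEVEL_TO_SEVERITY = {1: 2, 2: 3, 3: 4, 4: 6, 5: 7}

def from_syslog(line: str, year: int) -> LogRecord:
    m = SYSLOG_RE.match(line)
    if m is None:
        raise ValueError(f"not a BSD-syslog line: {line!r}")
    # Assumption: the sender logs in UTC; the year is supplied externally.
    ts = datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S")
    return LogRecord(ts.replace(tzinfo=timezone.utc).isoformat(), m["host"],
                     "syslog", int(m["pri"]) % 8, m["msg"])

def from_eventlog(event: dict) -> LogRecord:
    # The dictionary keys are hypothetical stand-ins for Windows event fields.
    ts = datetime.fromisoformat(event["TimeCreated"]).astimezone(timezone.utc)
    severity = EVENTLOG_LEVEL_TO_SEVERITY.get(event["Level"], 6)
    return LogRecord(ts.isoformat(), event["Computer"], "eventlog",
                     severity, event["Message"])
```

Once both sources produce `LogRecord` objects, the subsequent steps (aggregation, filtering, analysis) only need to understand one format.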

Aggregation

The next pre-processing step is aggregation, in which log messages with identical content are combined into one record. The same system often generates identical log messages several times, so that the repeated messages carry little additional information. For this reason, only the first log message is processed further. However, it is important to annotate this first message with the number of identical events that occurred, so that the frequency of the message can still be determined.
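The aggregation rule described above (keep the first message, count the repeats) can be sketched as follows. The assumption that two records are "identical" when host and message text match is hypothetical; real systems often also restrict aggregation to a time window so that a message recurring days later is reported again.

```python
from dataclasses import dataclass

@dataclass
class Aggregated:
    first: dict      # the first occurrence, kept in full
    count: int = 1   # number of identical messages seen, including the first

def aggregate(records: list[dict]) -> list[Aggregated]:
    # Assumption: records are identical if host and message text match;
    # the timestamps of the repeats are deliberately discarded.
    groups: dict[tuple[str, str], Aggregated] = {}
    for rec in records:
        key = (rec["host"], rec["message"])
        if key in groups:
            groups[key].count += 1
        else:
            groups[key] = Aggregated(first=rec)
    # Dicts preserve insertion order, so results follow first-seen order.
    return list(groups.values())
```

For example, three "link down" messages from the same firewall collapse into one record with `count == 3`, while the timestamp of the first occurrence is preserved.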

Filtering

Along with normalisation and aggregation, a filter function is also required for meaningful centralised logging. Filtering allows data that is irrelevant to the respective purpose to be discarded as early as possible and excluded from further processing. The logged data of security-relevant IT systems is of primary interest. Application logs intended for monitoring the proper use of the respective applications are only considered afterwards. In addition, regularly queried monitoring information about system operation is relevant from an availability point of view. This includes, for example, the reachability of systems over the network and error messages from operating systems that indicate problems.
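A filter of this kind can be expressed as a set of rules, where a record is kept if at least one rule accepts it. The sketch below assumes normalised records with `host` and `severity` fields; the host inventory and the "error or worse" threshold are hypothetical and would be derived from the organisation's own list of security-relevant systems.

```python
from typing import Callable

Record = dict
Rule = Callable[[Record], bool]

# Hypothetical inventory of security-relevant systems.
SECURITY_RELEVANT_HOSTS = {"fw1", "vpn-gw", "dc01"}

def is_security_relevant(rec: Record) -> bool:
    return rec["host"] in SECURITY_RELEVANT_HOSTS

def is_error_or_worse(rec: Record) -> bool:
    # Syslog scale: 0 (emergency) .. 3 (error) count as error or worse.
    return rec["severity"] <= 3

def make_filter(*rules: Rule) -> Rule:
    # Keep a record if any rule accepts it; everything else is discarded
    # before it reaches aggregation and analysis.
    return lambda rec: any(rule(rec) for rule in rules)

keep = make_filter(is_security_relevant, is_error_or_worse)
```

Applying `keep` directly after normalisation means that informational messages from ordinary workstations never reach the later, more expensive processing stages.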

Analysis

The objective of analysing the logged data is to detect problems during IT operation and attacks on an IT system as quickly as possible. For this, the components must be monitored in real time. Along with security events and errors, the analysis also provides information about the current utilisation. When analysing logged data, the focus must be on presenting the results meaningfully in a powerful user interface and on supporting the creation of reports. Most systems detect events automatically, but whether an actual attack has occurred should be confirmed by an administrator. For this task, the administrators must be sufficiently trained and supported by suitable analysis systems.
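As a very small example of turning normalised records into report material, the sketch below counts events per host and severity. It assumes records with `host` and `severity` (syslog scale) fields. Such a summary only points an administrator towards anomalies; deciding whether an attack has actually taken place remains a human task.

```python
from collections import Counter

# Standard syslog severity keywords, indexed by severity value 0..7.
SEVERITY_NAMES = ["emerg", "alert", "crit", "err",
                  "warning", "notice", "info", "debug"]

def summarise(records: list[dict]) -> list[tuple[tuple[str, str], int]]:
    # Count events per (host, severity name), most frequent first; ties
    # keep the order in which the combinations were first seen.
    counts = Counter((r["host"], SEVERITY_NAMES[r["severity"]]) for r in records)
    return counts.most_common()

recs = [{"host": "fw1", "severity": 4}, {"host": "fw1", "severity": 4},
        {"host": "dc01", "severity": 2}]
print(summarise(recs))  # -> [(('fw1', 'warning'), 2), (('dc01', 'crit'), 1)]
```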

Alarm

Collected logged data can support an IT early-warning system in monitoring existing procedures and data flows and can provide an interface for triggering alarms. When important events occur, it should be possible to trigger alarms using different types of notification, such as email or SMS. For alarms to be meaningful, the number of false alarms must be kept low. An important aspect here is to set the thresholds realistically and to adapt them to the circumstances of the information system.
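A common way to implement such a threshold is a sliding time window: an alarm is raised only if a source produces at least a given number of events within that window. The sketch below assumes events arrive in non-decreasing time order; the class name, the threshold of 5 events, and the 60-second window are hypothetical values that would need tuning to keep false alarms low. Sending the actual email or SMS is left out.

```python
from collections import defaultdict, deque

class ThresholdAlarm:
    """Signal an alarm when one key (e.g. a source address) produces at
    least `threshold` events within `window_seconds`. Both values are
    hypothetical and must be adapted to the information system."""

    def __init__(self, threshold: int, window_seconds: float):
        self.threshold = threshold
        self.window = window_seconds
        self.events: defaultdict[str, deque] = defaultdict(deque)

    def observe(self, key: str, ts: float) -> bool:
        # Assumption: timestamps for a key arrive in non-decreasing order.
        q = self.events[key]
        q.append(ts)
        # Drop timestamps that have fallen out of the sliding window.
        while q and q[0] <= ts - self.window:
            q.popleft()
        if len(q) >= self.threshold:
            q.clear()  # reset so that one burst triggers one alarm, not many
            return True
        return False

alarm = ThresholdAlarm(threshold=5, window_seconds=60)
print([alarm.observe("10.0.0.7", t) for t in [0, 10, 20, 30, 40]])
# -> [False, False, False, False, True]
```

Raising the threshold or shortening the window reduces false alarms but also delays or hides genuine attacks, which is why the values should be reviewed regularly.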

Archiving

If logged data is to be stored permanently, it must be determined which legal or contractual retention periods apply. A minimum retention period may be specified to ensure that all activities can be traced, while data protection regulations may impose a deletion requirement (see also S 2.110 Data protection guidelines for logging procedures).
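The interplay of a minimum retention period and a deletion requirement can be checked per record, as in the following sketch. The parameter names and the three outcomes are hypothetical. The actual periods must come from the applicable legal, contractual, and data protection requirements, and a configuration in which the minimum exceeds the deletion deadline is treated as an error that must be resolved organisationally.

```python
from datetime import date

def retention_action(logged_on: date, today: date,
                     min_days: int, max_days: int) -> str:
    # min_days: hypothetical minimum retention (traceability requirement).
    # max_days: hypothetical deletion deadline (data protection requirement).
    if min_days > max_days:
        raise ValueError("minimum retention exceeds deletion deadline")
    age = (today - logged_on).days
    if age < min_days:
        return "retain"
    if age < max_days:
        return "may delete"
    return "must delete"

today = date(2024, 12, 31)
print(retention_action(date(2024, 8, 1), today, 90, 180))  # -> may delete
```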