S 4.430 Analysing the logged data

Initiation responsibility: IT Security Officer, Head of IT

Implementation responsibility: Administrator

An information system normally generates a large number of log files.

Before the log entries can be analysed, the data must be normalised. Normalisation converts the different data formats of the log-generating systems to a uniform format. It is also important to restrict the logged data to the relevant entries before analysis in order to reduce the data volume. This is done by filtering, aggregating, and correlating the data (see S 4.431 Selecting and processing relevant information for logging). These safeguards are particularly important when logging is performed centrally.
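The normalisation step can be illustrated with a minimal Python sketch. The two input formats (a line starting with an ISO 8601 timestamp and a semicolon-separated line starting with a Unix epoch value), the field names of the uniform record, and the function name `normalise` are assumptions made for this example only; real log sources will use other formats.

```python
import re
from datetime import datetime, timezone

# Hypothetical format A: "<ISO 8601 timestamp> <host> <application>: <message>"
FORMAT_A = re.compile(r"^(?P<ts>\S+) (?P<host>\S+) (?P<app>[^:\s]+): (?P<msg>.*)$")


def normalise(line: str) -> dict:
    """Convert a line in one of two assumed formats to a uniform record."""
    line = line.strip()
    if line.count(";") >= 3 and line.split(";", 1)[0].isdigit():
        # Hypothetical format B: "<Unix epoch>;<host>;<application>;<message>"
        epoch, host, app, msg = line.split(";", 3)
        ts = datetime.fromtimestamp(int(epoch), tz=timezone.utc)
    else:
        match = FORMAT_A.match(line)
        if match is None:
            raise ValueError(f"unrecognised log format: {line!r}")
        # Timestamps are converted to UTC so that all records are comparable.
        ts = datetime.fromisoformat(match["ts"]).astimezone(timezone.utc)
        host, app, msg = match["host"], match["app"], match["msg"]
    return {
        "timestamp": ts.isoformat(),
        "host": host,
        "application": app,
        "message": msg,
    }


if __name__ == "__main__":
    print(normalise("2024-03-01T13:00:05+01:00 web01 sshd: Failed password for root"))
    print(normalise("1709294405;fw01;firewall;DROP src=10.0.0.5"))
```

Both example lines yield records with the same keys and a UTC timestamp, so the later filtering, aggregation, and correlation steps only have to deal with one format.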

Time synchronisation is another important aspect of analysing the logged data. To identify attacks or malfunctions across several IT systems and applications, all systems should be set to the same time. Central time servers can ensure that all systems have the same time even in a large information system (see also S 5.172 Secure time synchronisation for centralised logging). These servers provide the system time, for example using the Network Time Protocol (NTP) (see S 4.227 Use of a local NTP server for time synchronisation). All other systems in the information system can then be synchronised with this time.
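How a system determines its deviation from a time server can be sketched with the standard NTP offset and delay calculation. The function names and the tolerance value are assumptions made for this example; in practice an NTP client performs this calculation internally.

```python
def ntp_offset_and_delay(t1, t2, t3, t4):
    """Return (clock offset, round-trip delay) from the four NTP timestamps.

    t1: client sends the request   (client clock)
    t2: server receives the request (server clock)
    t3: server sends the reply      (server clock)
    t4: client receives the reply   (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2
    delay = (t4 - t1) - (t3 - t2)
    return offset, delay


def within_tolerance(offset, tolerance):
    """Check whether the clock deviation is acceptable (tolerance is a hypothetical policy value)."""
    return abs(offset) <= tolerance


if __name__ == "__main__":
    # Timestamps in milliseconds; the client clock is 95 ms behind the server.
    offset, delay = ntp_offset_and_delay(1000, 1150, 1160, 1120)
    print(offset, delay)  # 95.0 110
    print(within_tolerance(offset, tolerance=100))  # True
```

A check of this kind could be used to flag systems whose clocks deviate too far for their log entries to be correlated reliably.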

If the analysis is to support an alarm function, the logged information must be analysed promptly. During analysis, security-critical events are considered without delay. Additionally, relevant data from existing log files is extracted and included in the analysis. The analysis must focus in particular on deviations from normal behaviour, configuration errors, and error messages in order to gain an overview of all relevant events within the information system.

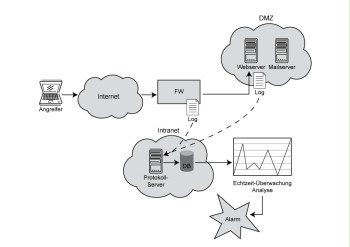

In order to be able to promptly identify a relevant log entry, it is possible to use suitable algorithms and analysis technologies such as signature identification and threshold analysis. These technologies are often used by IT early-warning systems. As soon as an attack is detected, an alarm should be triggered so that immediate intervention against the threat is possible.

Figure: Basic procedure for an IT early-warning system
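Signature identification and threshold analysis can be illustrated with a minimal Python sketch. The signatures, the failed-login pattern, the threshold value, and the function name `analyse` are assumptions made for this example and do not describe any particular early-warning product. A production system would typically also apply the threshold within a time window, which is omitted here for brevity.

```python
import re
from collections import Counter

# Hypothetical signatures: patterns that indicate a known attack.
SIGNATURES = {
    "sql_injection": re.compile(r"union\s+select", re.IGNORECASE),
    "path_traversal": re.compile(r"\.\./\.\./"),
}

# Hypothetical pattern for counting failed logins per source address.
FAILED_LOGIN = re.compile(r"Failed password for \S+ from (?P<src>\S+)")
THRESHOLD = 3  # assumed number of failed logins that triggers an alarm


def analyse(messages, threshold=THRESHOLD):
    """Return a list of (alarm type, detail) tuples for the given log messages."""
    alarms = []
    failures = Counter()
    for msg in messages:
        # Signature identification: compare each message with known patterns.
        for name, pattern in SIGNATURES.items():
            if pattern.search(msg):
                alarms.append(("signature", name))
        # Threshold analysis: count events and alarm once when the limit is reached.
        match = FAILED_LOGIN.search(msg)
        if match:
            src = match["src"]
            failures[src] += 1
            if failures[src] == threshold:
                alarms.append(("threshold", src))
    return alarms


if __name__ == "__main__":
    log = [
        "GET /index.php?id=1 UNION SELECT password FROM users",
        "Failed password for root from 10.0.0.5",
        "Failed password for root from 10.0.0.5",
        "Accepted password for alice from 10.0.0.7",
        "Failed password for admin from 10.0.0.5",
    ]
    for alarm in analyse(log):
        print(alarm)
```

The single SQL injection attempt is reported through its signature, whereas the failed logins only raise an alarm once the third attempt from the same source is seen.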

So that the events and log entries remain traceable, for example if evidence has to be preserved, a report should be drawn up after the analysis. Many logging applications offer a web interface that also presents the analysis results graphically. This makes possible trends easier to identify. The web interface can also be used to define custom analysis views and filters.
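A simple text report over analysed log records might be produced as sketched below. The record fields `host` and `severity` and the function name `build_report` are assumptions made for this example; logging applications usually ship their own reporting functions.

```python
from collections import Counter


def build_report(records):
    """Summarise analysed log records (dicts with assumed 'host' and 'severity' keys)."""
    by_severity = Counter(r["severity"] for r in records)
    by_host = Counter(r["host"] for r in records)
    lines = [f"Analysed log entries: {len(records)}", "Entries per severity:"]
    lines += [f"  {severity}: {count}" for severity, count in by_severity.most_common()]
    lines.append("Entries per host:")
    lines += [f"  {host}: {count}" for host, count in by_host.most_common()]
    return "\n".join(lines)


if __name__ == "__main__":
    records = [
        {"host": "web01", "severity": "error"},
        {"host": "web01", "severity": "info"},
        {"host": "fw01", "severity": "error"},
    ]
    print(build_report(records))
```

Counts of this kind, collected at regular intervals, are also the raw material for the graphical trend views mentioned above.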

If the logged data is analysed centrally, it is possible to identify complex relationships within the information system and to search for operational or security incidents. The logged data should therefore be archived for future analyses. In addition to the internal requirements regarding the retention period, it must be checked in advance which legal or contractual retention periods apply to log files. A minimum retention period may be specified in order to guarantee that all activities can be traced, and a deletion requirement may apply due to data protection regulations (see also S 2.110 Data protection guidelines for logging procedures).
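The interplay between a minimum retention period and a deletion requirement can be sketched as follows. The periods used (90 and 180 days), the function name `retention_action`, and the three result values are assumptions made for this example; the actual periods must be taken from the applicable legal, contractual, and internal requirements.

```python
from datetime import date, timedelta


def retention_action(created: date, today: date, min_days: int, max_days: int) -> str:
    """Decide what to do with an archived log file of the given age.

    min_days: assumed minimum retention period (file must be kept)
    max_days: assumed deletion deadline (file must be deleted afterwards)
    """
    if min_days > max_days:
        raise ValueError("minimum retention period exceeds the deletion deadline")
    age = (today - created).days
    if age > max_days:
        return "delete"    # deletion requirement applies
    if age < min_days:
        return "retain"    # minimum retention period not yet reached
    return "optional"      # may be kept or deleted according to internal policy


if __name__ == "__main__":
    today = date(2024, 6, 30)
    for age in (30, 120, 200):
        created = today - timedelta(days=age)
        print(age, retention_action(created, today, min_days=90, max_days=180))
```

The explicit check that the minimum period does not exceed the deletion deadline reflects the need to resolve conflicting requirements before archiving starts.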

Review questions:

- Is the data normalised before analysis?

- Are the systems in the information system time-synchronised?

- Is a report drawn up after the logged data has been analysed?

- Is the logged data archived for future analyses?

- Are legal provisions taken into consideration when archiving the logged data?